At the ARIA launch event, we had a great keynote by Julian Jara-Ettinger. He talked about his work exploring how people establish understanding, connection, and communication with each other. It was a rich and nuanced talk that showed (with some fascinating experiments) how we build models of others in our heads, how we communicate outside of language, and how social cues help us build understanding of, and context for, what’s being communicated.

In the same vein, Janet’s talk went deep into the details of team-machine interactions in two NASA projects, with nuance, fun anecdotes, and a lot of texture that illustrates the real creativity that goes into making these hybrid systems work in very distinct ways.

Current AI “Discourse” has none of this. It has no nuance, no science, and no imagination.

Anyone who listened to Julian’s talk would come away with a deep appreciation for the sophisticated way in which people communicate verbally, non-verbally, and in an embodied way, with each other. And would be astonished at the presumption that current LLMs could capture any of that.

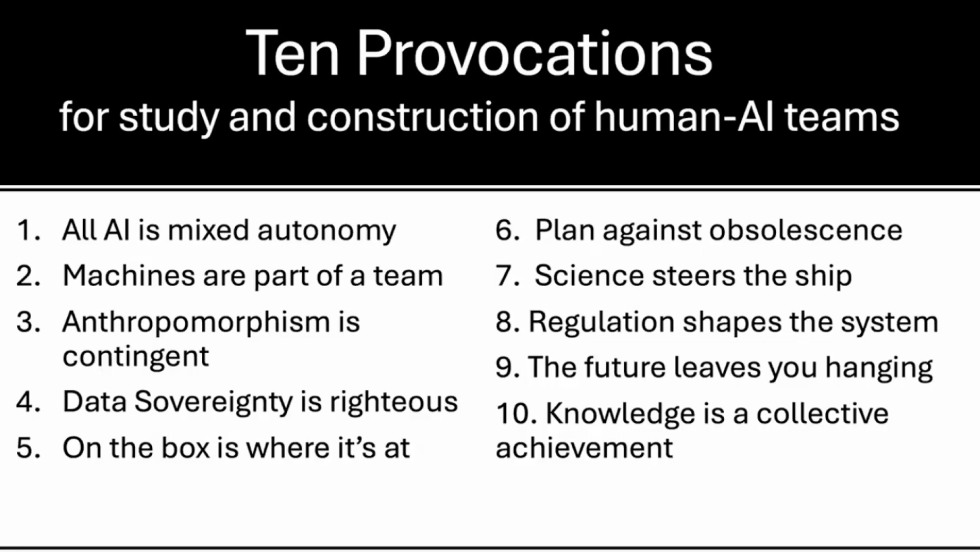

Anyone who listened to Janet’s talk would come away with a deep appreciation for the sophistication and subtlety with which teams of people organize in very different ways to use technology to carry out some shared mission and purpose. And would be astonished at the poverty of imagination in the shouts of “Agents!”, “Chatbots!”, “Assistants!” that echo across the tech landscape.

What gets me most excited about the moment we are in is the sense of openness and possibility: that we can take all these shiny new AI tools into a mental garage and tinker around to come up with all kinds of crazy ideas. What gets me most depressed is that in order to do this we have to rise above, or hunker down below (pick your favorite direction), the clamor of BS around specific products that specific companies need to sell to justify even more investment. And that this clamor is also forcing misguided structural changes in how we organize (or refuse to organize) society.

Real innovation is not coming from those selling us pre-canned, shrink-wrapped products. It’s coming, and will come, from those who have the courage to imagine new ways of working with and using AI tools that let us – people – drive, rather than be driven.

¹ Janet is the reason I can blithely go around saying “Latour” and “ANT”, and almost know what I’m saying. It’s like a secret handshake that gets me into cool STS clubs (OK, not that cool, but definitely full of lots of complicated words).